Joseph M. Honea

Director of Software Engineering

ABSTRACT

Implementation of a Digital Engineering framework is heavily reliant on seamless access to, and manipulation of, a variety of data sources produced in various environments. Additionally, a digital engineering initiative must be capable of distributing this data through network topologies effortlessly and securely, independent of proprietary software. The overall objective of this research was to identify the best data distribution framework for use in the development of a next-generation test, measurement, analysis, and reporting capability. Open data distribution protocols, including but not limited to MQTT, (i)DDS, OPC UA, and ZeroMQ (ZMQ), were evaluated. ZeroMQ was selected for its versatility, simplicity, and superior performance.

ZeroMQ also enabled straightforward publication and subscription, accommodated multiple data types, and delivered distribution efficiency limited primarily by hardware constraints. The ZMQ transport protocol is lightweight and implements a standard wire protocol for constructing distributed frameworks in a multitude of networking patterns. By integrating this distribution framework with other widely known standard formats such as JSON and JSON-Schema, together with a simple data format, we created an open framework that supports information and command exchange between systems alongside publish/subscribe data mechanisms. This removed the need for proprietary libraries or SDKs and provided a completely open solution, giving unfettered access to data and the means to manage and control data systems from any language or platform. Compared with the other protocols, the ZMQ implementation showed a marked reduction in complexity, lower overhead for data engagement, and higher throughput for data-intensive systems. ZMQ also provides encryption capabilities and the potential to implement user access controls when needed. Overall, it offers a robust and straightforward foundation for building open, performant, and secure distributed data systems. This paper summarizes the evaluation of ZMQ against the other distribution protocols and its implementation in a next-generation test and measurement solution.

INTRODUCTION

As digital engineering frameworks are developed and implemented across industries, a common methodology is needed for the secure and efficient exchange of data. This data exchange allows multiple systems responsible for independent data actions and insights to interoperate seamlessly. Users must also be able to access the data easily to derive customized and proprietary insights. Finally, the data being produced and consumed must remain independent of any proprietary software constraints imposed by the distribution technology.

In working to provide software solutions that meet these objectives, various common and openly available protocols and solutions were researched and evaluated over a two-year period. This research aimed to identify the optimal data distribution framework for developing a next-generation test, measurement, analysis, and reporting capability. Each protocol was assessed against a common set of criteria, described in Section I.

This paper presents the evaluated protocols and the general results of their evaluation. Based on these results and additional research, it then describes the chosen solution and the combination of technologies used to build a robust system. Finally, the benefits of such a system in a modern, next-generation environment are discussed.

I. TECHNOLOGY EVALUATIONS

To provide a solution that meets the need for a readily available, open standard for data distribution, many different libraries and products were evaluated. Because the scope of this paper does not permit a discussion of every candidate, it focuses on the four that showed the most promise: MQTT, (i)DDS, OPC UA, and ZeroMQ. These protocols were first evaluated for overall performance using the literature and published case studies; where case studies were not available, independent tests were performed. Finally, each candidate was downloaded and assessed for usage and integration across various platforms and languages. The following criteria were used to evaluate all candidate protocols:

- Cross-platform and cross-language availability

- Support for 1:1 and 1:N connections in request/reply and publish/subscribe patterns

- Open API access

- Ease of API usage

- Support for asynchronous communication

- Support for encrypted and/or secure data access

- Overall performance

All of the candidates are middleware systems that support publish/subscribe messaging. They are widely used across many domains; however, many of them have not been evaluated as a centralized data distribution system for a digital engineering framework with time-critical distribution requirements and large subscriber counts.

A. MQTT Evaluation

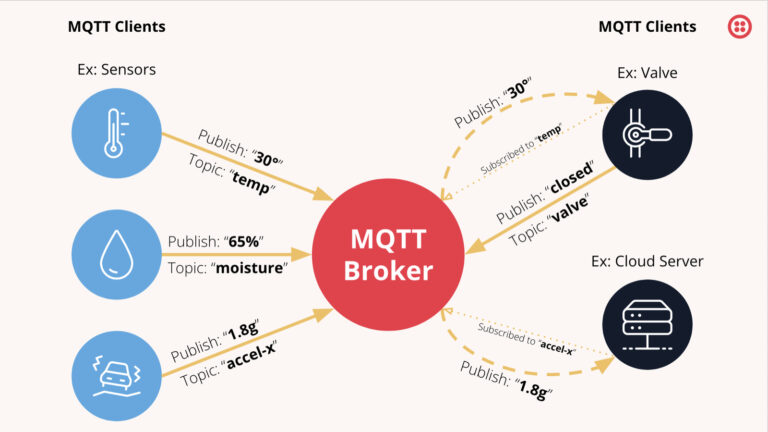

MQTT is a broker-based publish/subscribe messaging protocol used primarily in the Internet of Things (IoT) for efficient data transfer between systems over limited-bandwidth links. MQTT was developed in the late 1990s by Dr. Andy Stanford-Clark of IBM and Arlen Nipper of Arcom (now Cirrus Link) to address the need for a machine-to-machine communication protocol in environments with unreliable or constrained networks. It was initially used to monitor oil pipelines over satellite connections. Today the protocol is widely used for data exchange between sensors, devices, and applications across industries such as automotive, manufacturing, and telecommunications. Because of its lightweight, efficient data transfer, it is heavily used to move low-rate data from sensors to cloud systems. It is also the primary protocol for connecting devices to Azure cloud services for IoT monitoring and for Azure Digital Twins data connections.

Because MQTT is broker-based, publishers such as sensors send their messages to a broker. The broker processes each message and delivers it to the clients subscribed to the matching topic. This model works well but was found to be limited to lower-rate data systems. As message size or publishing rate increases, latency to the subscriber grows and overall throughput drops. Because every message passes through the broker, data cannot reliably be seen in “real-time,” and performance depends heavily on the broker’s capabilities. Additionally, when the broker cannot keep up with its publishers or its buffers fill, it blocks and drops additional incoming data packets. Its performance is therefore insufficient for the needs evaluated herein. However, given its wide support in this area, the protocol would be a good choice for forwarding summary information from higher-rate systems to cloud-based systems. The API was also found to be fairly simple to use and widely available across software stacks and platforms.
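The buffering failure described above can be illustrated with a toy model. This is a hypothetical sketch, not MQTT broker code: it assumes a broker with a fixed-capacity queue (`capacity`) that receives `publish_per_tick` messages per time step and forwards at most `drain_per_tick`, dropping anything that arrives while the queue is full. Real brokers differ in how they queue, throttle, or disconnect clients under load.

```python
from collections import deque


def simulate_broker(publish_per_tick: int, drain_per_tick: int,
                    capacity: int, ticks: int) -> tuple[int, int]:
    """Toy model of a broker with a bounded buffer (not a real MQTT broker).

    Returns (delivered, dropped). Messages that arrive while the
    buffer is full are dropped rather than queued.
    """
    buffer = deque()
    delivered = dropped = 0
    for _ in range(ticks):
        # Publishers push a burst of messages into the broker.
        for _ in range(publish_per_tick):
            if len(buffer) < capacity:
                buffer.append(object())
            else:
                dropped += 1
        # The broker forwards what it can to subscribers this tick.
        for _ in range(min(drain_per_tick, len(buffer))):
            buffer.popleft()
            delivered += 1
    return delivered, dropped


if __name__ == "__main__":
    # Broker keeps up: nothing is lost.
    print(simulate_broker(5, 5, capacity=10, ticks=10))  # (50, 0)
    # Publishers outpace the broker: the buffer fills and messages are dropped.
    print(simulate_broker(8, 5, capacity=10, ticks=10))  # (50, 25)
```

The model shows the shape of the problem rather than real numbers: once the publish rate exceeds the forwarding rate, a larger buffer only delays the onset of drops.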

B. (i)DDS Evaluation

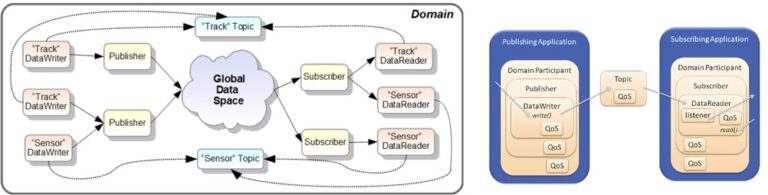

DDS was the next system evaluated. DDS (Data Distribution Service) is a standard developed by the Object Management Group (OMG) in the early 2000s. It is essentially a specification for which various companies provide implementations; these vary in performance, features, and ease of use, but the specification requires them to interoperate with one another. During the evaluation, OpenDDS (originally developed by Object Computing, Inc.), RTI’s DDS implementation, and Fast DDS were evaluated. DDS was developed to address the need for high-performance, scalable, and interoperable communications in complex, real-time environments. It uses a publish/subscribe model and is generally described as data-centric rather than message-oriented. Publishers create topics and send data samples to subscribers, which receive the samples for the topics they subscribe to. DDS publishers and subscribers must know and support the data types and structures being produced and consumed, so these types are defined in an Interface Definition Language (IDL) file. DDS also employs a Quality of Service (QoS) policy system that defines how the distribution service behaves, with policies covering parameters such as publishing rate limits and allowed latency. Finally, DDS communicates peer to peer, typically over UDP with multicast used for discovery and optionally for data, so it does not require a broker. These are favorable characteristics.

Regarding overall performance, DDS delivered high throughput even with larger data payloads. It performed best in a 1:1 configuration. However, with multiple subscribers connected, throughput decreased roughly in proportion to the number of subscribers. This is believed to result from publishers forwarding data to each subscriber individually, combined with high packetization overhead. DDS latency was relatively low but fluctuated slightly as the publishing rate increased, and it also increased with payload size.

The DDS libraries are large and complex. Customization requires substantial familiarization: writing an IDL file for the data, defining a QoS policy, and writing publishers or subscribers against these libraries. The DDS packages include a large set of executables and libraries and impose considerable implementation overhead. Given the complexity of the specification and of the available libraries, building systems capable of exchanging data without involving the companies that publish DDS packages is quite difficult. This sheer complexity, and the resulting reliance on proprietary software development kits (SDKs), restricts true openness.

One specific DDS variant, iDDS, was also evaluated. Developed by and available from MDS Aero, iDDS is intended specifically for instrumentation. It successfully abstracts away much of the complexity of DDS by requiring conformance to the iDDS IDL file and its supported QoS policies. However, investigation of the library and of existing installations indicated that iDDS is primarily suited to low-rate data rather than high-rate, high-channel-count systems.

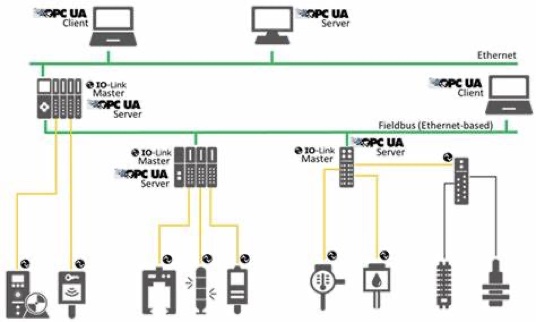

C. OPC UA Evaluation

OPC Unified Architecture (OPC UA) was developed by the OPC Foundation in the early 2000s. It is an IEC standard (IEC 62541) that succeeds the COM-based OPC Classic specifications; unlike COM, which was a Microsoft Windows-only technology, OPC UA is platform independent. It is a service-oriented protocol that supports both client/server and publish/subscribe models. OPC UA provides standardized data models for over 60 types of industrial equipment, freely available as part of its companion specification documents. It also provides extensible security profiles and security key management, and it can map to multiple communication protocols such as TCP/IP, UDP/IP, WebSockets, AMQP, and MQTT.

An OPC UA system consists of OPC UA servers and OPC UA clients. A specialized OPC UA server can also provide data to cloud systems. This requires OPC UA-compatible devices, or devices that implement an on-board server, for data distribution. OPC UA is heavily used in industrial settings with many different types of devices; however, these are generally low-rate devices, and no examples were found of OPC UA being used for dynamic data. Additionally, the OPC Foundation requires membership for free access to its protocols and implementations. Libraries are available, but with licensing constraints that make them difficult to integrate into commercial platforms. In addition, the most common SDKs are complex and require substantial effort to integrate into systems.

The evaluation concluded that OPC UA would not be a suitable data distribution system because its implementations are primarily directed at devices and are not suited to general data. Although OPC UA can handle high-rate data, the service degrades at higher channel counts, and supporting multiple device types requires a large number of OPC UA servers. Given these restrictions, the platform was not pursued further.

D. ZeroMQ Evaluation

ZeroMQ, also known as ZMQ or 0MQ, was created at iMatix by Pieter Hintjens and Martin Sústrik. Hintjens had previously been involved in the design of AMQP, which is widely used in distributed financial networks, and in OpenAMQ, iMatix’s AMQP implementation. AMQP is another broker-based protocol; it worked well for financial networks but could not meet the demands of a high-performance distributed data protocol, which led to the creation of ZeroMQ. ZeroMQ is backed by iMatix and supported by a large, open community. It is brokerless and achieves high performance through its low-level implementation. The core library implements the ZeroMQ Message Transport Protocol (ZMTP) wire protocol and is available for a wide variety of languages and platforms. It exposes a simple, easy-to-use interface that abstracts away much of the complexity of network communication, error handling, automatic reconnection, and operating-system-specific details. The library supports a wide variety of transports and topologies, making it easy to adapt to the user’s needs. These include TCP/IP, UDP/IP, multicast, and IPC transports, and the Request/Reply, Publish/Subscribe, Pipeline (Push/Pull), Exclusive Pair, and Dealer/Router patterns. ZeroMQ also supports user authentication and data encryption for streaming and access.
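To make the wire protocol less abstract, the sketch below encodes and decodes multipart messages using the ZMTP 3.x frame layout: a flags octet (bit 0 = MORE, bit 1 = LONG), then a 1-octet or 8-octet big-endian size, then the body. It is illustrative only; it omits the connection greeting, the security handshake, and command frames, all of which libzmq handles, and the function names are hypothetical. Applications using a ZeroMQ library never need to write this themselves.

```python
import struct

# Illustrative ZMTP 3.x frame codec; greeting, security handshake,
# and command frames are deliberately omitted.
MORE = 0x01  # another frame of the same message follows
LONG = 0x02  # size field is 8 octets instead of 1


def encode_frame(body: bytes, more: bool = False) -> bytes:
    """Encode one message frame: flags, size, body."""
    flags = MORE if more else 0
    if len(body) <= 255:
        return bytes([flags, len(body)]) + body
    return bytes([flags | LONG]) + struct.pack(">Q", len(body)) + body


def encode_message(parts: list[bytes]) -> bytes:
    """Encode a multipart message; every frame but the last sets MORE."""
    last = len(parts) - 1
    return b"".join(encode_frame(p, more=(i < last)) for i, p in enumerate(parts))


def decode_message(data: bytes) -> list[bytes]:
    """Decode the first complete multipart message in `data`."""
    parts, pos = [], 0
    while pos < len(data):
        flags = data[pos]
        pos += 1
        if flags & LONG:
            (size,) = struct.unpack_from(">Q", data, pos)
            pos += 8
        else:
            size = data[pos]
            pos += 1
        parts.append(data[pos:pos + size])
        pos += size
        if not flags & MORE:
            break
    return parts


if __name__ == "__main__":
    wire = encode_message([b"topic", b"hi"])
    print(wire)                  # b'\x01\x05topic\x00\x02hi'
    print(decode_message(wire))  # [b'topic', b'hi']
```

Sending the topic as the first frame, as in the usage example, matters for publish/subscribe: a ZeroMQ SUB socket filters messages by prefix-matching its subscriptions against the first frame.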

Results of this evaluation showed that ZeroMQ provided performance equal to that of DDS with a single subscriber and better performance with multiple subscribers. ZeroMQ latency and throughput do degrade as payloads grow larger. Keeping payloads below 1 kilobyte, or splitting larger payloads into smaller messages, results in very low latency and high throughput at both low and high publish rates.
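One way to apply the 1 KB guideline is to split large payloads into bounded chunks before publishing and reassemble them on the subscriber side. The sketch assumes a hypothetical 4-byte chunk header (chunk index and chunk count, each an unsigned 16-bit integer); it is not part of ZeroMQ or of the framework described in this paper. Each chunk would be sent as its own ZeroMQ message.

```python
import struct

CHUNK_LIMIT = 1024                   # bytes per chunk, including the header
CHUNK_HEADER = struct.Struct(">HH")  # hypothetical: chunk index, chunk count


def split_payload(payload: bytes, limit: int = CHUNK_LIMIT) -> list[bytes]:
    """Split `payload` into chunks of at most `limit` bytes each."""
    body = limit - CHUNK_HEADER.size
    count = max(1, -(-len(payload) // body))  # ceiling division; empty payload -> 1 chunk
    if count > 0xFFFF:
        raise ValueError("payload too large for a 16-bit chunk count")
    return [
        CHUNK_HEADER.pack(i, count) + payload[i * body:(i + 1) * body]
        for i in range(count)
    ]


def reassemble(chunks: list[bytes]) -> bytes:
    """Rebuild a payload from its chunks, which may arrive in any order."""
    pieces = {}
    count = None
    for chunk in chunks:
        index, count = CHUNK_HEADER.unpack_from(chunk)
        pieces[index] = chunk[CHUNK_HEADER.size:]
    if count is None or len(pieces) != count:
        raise ValueError("missing chunks")
    return b"".join(pieces[i] for i in range(count))


if __name__ == "__main__":
    data = bytes(range(256)) * 20                    # 5120-byte payload
    chunks = split_payload(data)
    print(len(chunks), max(len(c) for c in chunks))  # 6 1024
```

The header costs 4 bytes per 1024-byte chunk (under 0.4%). Whether chunking helps in a given deployment should be measured, since the sub-1 KB observation comes from this paper's own tests.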

E. Summary

After evaluating the aforementioned protocols, ZeroMQ was ultimately chosen due to its performance, ease of use, and flexibility. A performance summary for the metrics used is shown below:

II. EXTENDING ZeroMQ

ZeroMQ is, for the most part, a low-level library. It does not provide many of the additional features of DDS, nor does it define its own set of data abstractions. It does, however, provide a simple and maintainable interface for building distributed data solutions. To build a complete solution, we therefore extended ZeroMQ, using ZMTP as the main protocol for the wire and topologies and creating a simple interface for common data abstractions. To do this while remaining within the “open” ideology, we incorporated common, well-known standards, among them JSON. JSON (JavaScript Object Notation) is a standard, human-readable format for structured data. We used JSON for three main aspects of the system:

- Information exchange: exchange of settings and general information between two network nodes.

- Request structures: used by clients to request specific information or data from publishers.

- Command structures: used by clients to send commands to publishers.
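The framework’s actual message schemas are not published in this paper, so the message shapes below are hypothetical. They illustrate how request and command structures might be expressed in JSON and checked against a schema. The `check` function is a deliberately minimal validator covering only the JSON-Schema `type`, `enum`, `required`, and `properties` keywords. A production system would use a complete JSON-Schema library.

```python
import json

# Hypothetical schema shared by all control messages.
MESSAGE_SCHEMA = {
    "type": "object",
    "required": ["kind", "name"],
    "properties": {
        "kind": {"type": "string", "enum": ["info", "request", "command"]},
        "name": {"type": "string"},
        "args": {"type": "object"},
    },
}

_TYPES = {"object": dict, "array": list, "string": str,
          "integer": int, "number": (int, float), "boolean": bool}


def check(instance, schema, path="$"):
    """Return a list of violations of a small JSON-Schema subset."""
    expected = schema.get("type")
    if expected:
        is_bool = isinstance(instance, bool)  # bool is a subclass of int in Python
        if not isinstance(instance, _TYPES[expected]) or (
                is_bool and expected != "boolean"):
            return [f"{path}: expected {expected}"]
    errors = []
    if "enum" in schema and instance not in schema["enum"]:
        errors.append(f"{path}: {instance!r} not allowed")
    if isinstance(instance, dict):
        for key in schema.get("required", []):
            if key not in instance:
                errors.append(f"{path}: missing '{key}'")
        for key, sub in schema.get("properties", {}).items():
            if key in instance:
                errors.extend(check(instance[key], sub, f"{path}.{key}"))
    return errors


# Hypothetical request and command, serialized as they would travel on the wire.
request = {"kind": "request", "name": "get_topics"}
command = {"kind": "command", "name": "start_acquisition",
           "args": {"sample_rate_hz": 100000}}
wire = json.dumps(command).encode("utf-8")

print(check(request, MESSAGE_SCHEMA))           # []
print(check(json.loads(wire), MESSAGE_SCHEMA))  # []
print(check({"kind": "cmd"}, MESSAGE_SCHEMA))   # ["$: missing 'name'", "$.kind: 'cmd' not allowed"]
```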

The formats for these three aspects are defined in a JSON-Schema. JSON-Schema is another standard and is used to validate that the structures exchanged between systems comply with the defined format. After defining the JSON information exchange, the data format and its extraction needed to be defined. For this, a basic binary packet structure, referred to as a data message, is used. The message structure contains header information describing the type of data it holds, timestamp information, references to where the data was created, and the data itself. This structure is simple and easy to use. Work is also under way to support custom data types whose type information is defined in a JSON-Schema and referenced in the data message.
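A data message of the kind described above can be packed with Python’s standard `struct` module. The field layout here is an assumption for illustration: a 1-byte type code, a 4-byte source identifier, an 8-byte nanosecond timestamp, and a 4-byte sample count, all little-endian, followed by the samples. The paper does not specify the framework’s actual header, and the type codes are hypothetical.

```python
import struct
import time
from dataclasses import dataclass

# Hypothetical header: type code, source id, timestamp (ns), sample count.
HEADER = struct.Struct("<BIQI")
# Hypothetical type codes mapped to struct format characters.
TYPE_CODES = {1: "f", 2: "h", 3: "d"}  # float32, int16, float64


@dataclass
class DataMessage:
    type_code: int
    source_id: int
    timestamp_ns: int
    samples: list

    def pack(self) -> bytes:
        fmt = f"<{len(self.samples)}{TYPE_CODES[self.type_code]}"
        header = HEADER.pack(self.type_code, self.source_id,
                             self.timestamp_ns, len(self.samples))
        return header + struct.pack(fmt, *self.samples)

    @classmethod
    def unpack(cls, buf: bytes) -> "DataMessage":
        type_code, source_id, timestamp_ns, count = HEADER.unpack_from(buf)
        fmt = f"<{count}{TYPE_CODES[type_code]}"
        samples = list(struct.unpack_from(fmt, buf, HEADER.size))
        return cls(type_code, source_id, timestamp_ns, samples)


if __name__ == "__main__":
    msg = DataMessage(1, 42, time.time_ns(), [0.5, -1.25, 3.0])
    buf = msg.pack()
    print(len(buf))                        # 29 (17-byte header + 3 x 4-byte floats)
    print(DataMessage.unpack(buf) == msg)  # True
```

Fixed-width, explicitly little-endian fields keep such a format easy to parse from any language without a generated SDK, which is the property the paper emphasizes.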

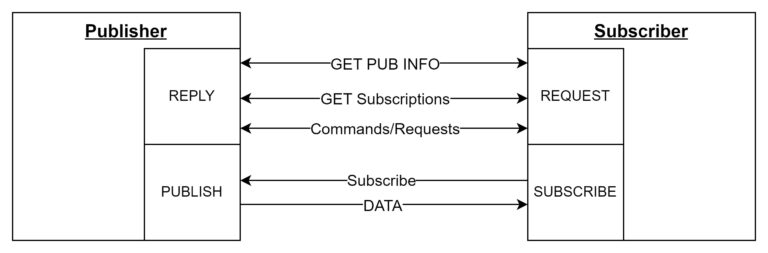

The system consists of a publisher and a subscriber. The publisher uses two ZeroMQ sockets. The first is the reply socket, which responds to requests and commands from clients. The second is the publish socket, a unidirectional connection that only publishes data to subscribed clients. The client, or subscriber, connects to the publisher’s reply socket, which is the only openly available interface. After connecting, the subscriber requests the address of the publisher’s publish socket and, once it is received, subscribes to the published data. The subscriber can also request additional information, such as the topics available for subscription and the settings of the system it is connected to. The diagram below shows this process:
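The connection sequence described above can be sketched independently of the transport. In this hypothetical sketch, `PublisherControl.handle` stands for the logic that would sit behind the publisher’s reply socket. `subscriber_handshake` accepts any function that sends request bytes and returns reply bytes, so it can be exercised without a network. The request names (`get_publish_address`, `get_topics`, `get_settings`) and reply fields are assumptions, not the framework’s actual protocol.

```python
import json


class PublisherControl:
    """Hypothetical request handler for the publisher's reply socket."""

    def __init__(self, publish_address: str, topics: list[str], settings: dict):
        self.publish_address = publish_address
        self.topics = topics
        self.settings = settings

    def handle(self, raw: bytes) -> bytes:
        request = json.loads(raw)
        name = request.get("name")
        if name == "get_publish_address":
            reply = {"ok": True, "address": self.publish_address}
        elif name == "get_topics":
            reply = {"ok": True, "topics": self.topics}
        elif name == "get_settings":
            reply = {"ok": True, "settings": self.settings}
        else:
            reply = {"ok": False, "error": f"unknown request {name!r}"}
        return json.dumps(reply).encode("utf-8")


def subscriber_handshake(send_request) -> tuple[str, list[str]]:
    """Ask the publisher where it publishes and which topics it offers.

    `send_request` takes request bytes and returns reply bytes.
    """
    def ask(name: str) -> dict:
        raw = json.dumps({"kind": "request", "name": name}).encode("utf-8")
        reply = json.loads(send_request(raw))
        if not reply["ok"]:
            raise RuntimeError(reply["error"])
        return reply

    address = ask("get_publish_address")["address"]
    topics = ask("get_topics")["topics"]
    return address, topics


if __name__ == "__main__":
    control = PublisherControl("tcp://192.0.2.10:5556", ["ch001", "ch002"],
                               {"sample_rate_hz": 100000})
    print(subscriber_handshake(control.handle))
    # ('tcp://192.0.2.10:5556', ['ch001', 'ch002'])
```

With pyzmq, `send_request` could wrap a REQ socket (`sock.send(raw)` followed by `sock.recv()`). The returned address would then be passed to a SUB socket’s `connect`, with `setsockopt(zmq.SUBSCRIBE, topic.encode())` called for each topic of interest. The publisher should return an address the subscriber can actually reach, not the wildcard `tcp://*:port` form it may have bound to.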

The implementation was tested under a load of 800 dynamic data channels running at an average of 100 kS/s/ch. The publisher received data for all 800 channels while multiple subscribers subscribed to the available data. The test system used a 10 Gbps Intel network adapter and an AMD EPYC processor. The primary limiting factor observed during testing was the available network bandwidth. ZeroMQ itself added very little overhead compared with all of the other candidates tested.
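A quick link-budget calculation shows why network bandwidth becomes the limit at this load. The paper does not state the sample width, so the sketch assumes 2-byte and 4-byte samples and ignores message headers and TCP/IP overhead. Over TCP, a ZeroMQ PUB socket sends a separate copy to each subscriber, so the link must carry one stream per subscriber.

```python
def link_budget(channels: int, rate_sps: float, bytes_per_sample: int,
                link_bps: float) -> tuple[float, int]:
    """Return (payload bits/s for one subscriber, full-rate subscribers that fit).

    Ignores framing, protocol headers, and TCP/IP overhead.
    """
    stream_bps = channels * rate_sps * bytes_per_sample * 8
    return stream_bps, int(link_bps // stream_bps)


if __name__ == "__main__":
    for width in (2, 4):  # assumed sample widths in bytes
        bps, subs = link_budget(800, 100_000, width, 10e9)
        print(f"{width}-byte samples: {bps / 1e9:.2f} Gb/s per subscriber, "
              f"{subs} subscriber(s) on 10 Gb/s")
    # 2-byte samples: 1.28 Gb/s per subscriber, 7 subscriber(s) on 10 Gb/s
    # 4-byte samples: 2.56 Gb/s per subscriber, 3 subscriber(s) on 10 Gb/s
```

These are upper bounds under the stated assumptions. The paper reports only that bandwidth was the primary limit, not a specific subscriber count.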

III. ZeroMQ AND OPEN PROTOCOL BENEFITS

The implementation, which uses ZeroMQ as the main wire protocol, JSON for object definitions and information, request, and command exchange, and a simple binary data message structure, was completed in less time than a similar solution built with DDS. This indicates that the system is open, easy to interface with, and requires minimal implementation overhead.

Additionally, the entire system is built on open and available protocols and software stacks. ZeroMQ is available in many programming languages, including C++, C#, Python, Go, and Java, and there is even an implementation for LabVIEW. Because the wire protocol is easily accessible and the JSON messages and data structure are simple, data abstraction is relatively straightforward. Python scripts to connect and subscribe to data were written in roughly 30 minutes. Finally, given this openness and the rise of generative AI, we experimented with, and succeeded in, generating interfaces to the publisher and custom publishers entirely with Microsoft Copilot. Similar experiments with the other protocols achieved limited success with MQTT and OPC UA, and none with DDS or iDDS. The main reason was that DDS has multiple implementations of the same specification, most of which are available only through paid subscriptions.

IV. CONCLUSION

In the rapidly evolving landscape of digital engineering, the need for a robust, open, and efficient data distribution framework is paramount. Through comprehensive evaluation, ZeroMQ emerged as the optimal solution for next-generation test, measurement, analysis, and reporting systems. Its lightweight design, flexibility, and ease of use make ZeroMQ a superior choice over the other protocols investigated and tested. ZeroMQ’s ability to maintain high performance even with multiple subscribers, together with its open-access framework, ensures that it meets the stringent demands of modern digital engineering environments.

By integrating universally accepted standards such as JSON for data formatting, the framework not only simplifies implementation but also ensures interoperability across various platforms and languages. This open, extensible solution eliminates the need for proprietary software, thereby reducing complexity and cost while enhancing accessibility and scalability.

The successful deployment and testing of ZeroMQ in high-demand scenarios demonstrates its capability to handle extensive data loads with minimal overhead, constrained primarily by hardware. ZeroMQ exceeds the criteria required of a future-proof, performant data distribution framework for engineering industries embracing digital transformation.

REFERENCES

Barroso, C., Fuchs, U., & Wegrzynek, A. (n.d.). Benchmarking message queue libraries and network technologies to transport large data volumes in the ALICE O² system.

Dworak, A., Ehm, F., Sliwinski, W., & Sobczak, M. (2011). Middleware trends and market leaders. Geneva: CERN.

Kang, Z., Canady, R., Dubey, A., Gokhale, A., Shekhar, S., & Sedlacek, M. (2020). Evaluating DDS, MQTT, and ZeroMQ Under Different. Vanderbilt University, Nashville.

MQTT. (n.d.). Retrieved from MQTT: https://mqtt.org/

OpenDDS. (n.d.). Retrieved from OpenDDS: https://opendds.org/

ZeroMQ. (n.d.). Retrieved from ZeroMQ: https://zeromq.org/